Estudio Santa Rita

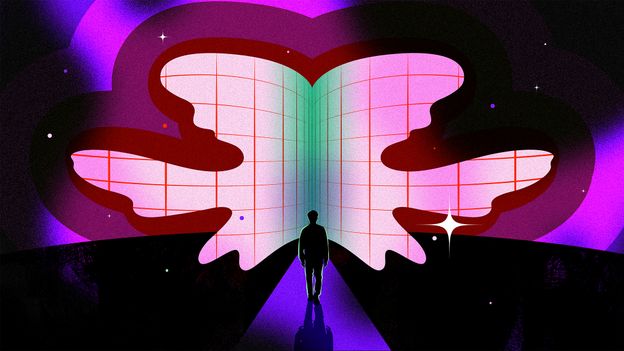

Rorschach tests stimulate human creativity and the mind’s capacity to ascribe meaning to our surroundings – but what insights can AI derive from them?

For over a century, the Rorschach inkblot test has served as a tool for gaining insights into people’s personalities.

Even if you haven’t personally taken one, you are likely familiar with the cards and their ambiguous inkblot shapes. Created by Swiss psychiatrist Hermann Rorschach in 1921, the assessment presents subjects with a series of inkblots and asks them to describe what they see. The images are intentionally ambiguous, leaving them open to interpretation.

For years, these tests have been popular amongst psychologists seeking to uncover insights into an individual’s psyche based on the images they perceive. This approach relies on the phenomenon known as pareidolia, where people tend to assign meaning to vague images. This is similar to how individuals might discern faces or animals in cloud patterns or on the Moon’s surface.

You may interpret a particular shape as a butterfly, while another might see a skull. Advocates of the test argue that both perspectives provide insights into your thought processes.

“When a person interprets a Rorschach image, they unconsciously project aspects of their psyche, such as fears, desires, and cognitive biases,” explains Barbara Santini, a London-based psychologist who employs Rorschach tests with her clients. “The efficacy of the test lies in the fact that human perception is not merely passive; rather, it is a dynamic process influenced by personal experiences.”

What transpires, however, when you present it to a brain that operates differently, like an artificial intelligence? What insights can an AI algorithm extract from these inkblots, and what can that reveal about the human mind?

Bat or moth?

Recent advancements in AI, such as OpenAI’s ChatGPT, allow us to explore this further. These “multimodal models” can process visual inputs as well as text, thanks to their image-recognition capabilities.

To investigate how AI interprets such images, we tasked ChatGPT with analyzing five of the ten frequently used inkblot images. The results, however, are complicated by the AI’s training: what it has already learned about the test shapes what it says it sees.

AI vs. Human Cognition

This article forms part of the series AI vs. Human Cognition, which examines the capacities of advanced AI while offering insights into the workings of our brains. Each piece pits a different AI technology against human cognition, posing questions designed to test the limits of intelligence. Can a machine craft a better joke than a comedian, or unravel ethical dilemmas better than a philosopher? We aim to find out.

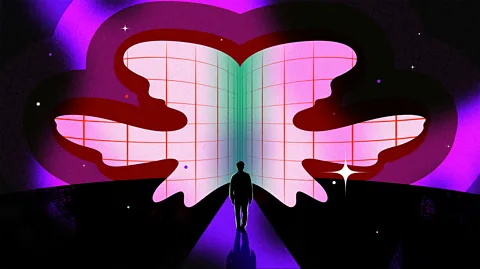

When ChatGPT was shown the first inkblot, taken from an online repository and commonly interpreted by humans as a bat, butterfly, or moth, its answer revealed how literal AI’s approach to images can be.

“This image is a Rorschach inkblot frequently employed in psychological evaluations to analyze perception and interpretation,” stated the chatbot. “Its ambiguous design is intended to elicit different responses based on personal experiences, emotions, and imagination.”

Then, without further prompting, it continued to articulate its own interpretation: “For me, it resembles something symmetrical, perhaps two animals or figures facing one another, or a single being with wings open.” The chatbot emphasized, “These inkblots beautifully invite personal interpretations!”

It then asked me what I saw in the image. I chose not to answer, and instead asked which of its two interpretations it believed fit best. “Upon closer examination, I would say it closely resembles a single entity with outstretched wings – possibly a bat or moth, with wings symmetrically spread,” it concluded. “The central portion could be viewed as the body, while elements on the sides evoke wing texture or shape.”

“I suspect that the AI is simply reciting what it knows about the specific test,” remarks Coen Dekker, a software developer from the Netherlands. A decade ago, Dekker had a neural network, an earlier form of the technology that underpins today’s AI chatbots, undertake a Rorschach test, albeit in a much simpler manner due to the technological limits of that era.

Ieva Kubiliute, a psychologist based in London, explains, “AI models primarily detect patterns, shapes, and textures within the inkblots. They then correlate these with a vast repository of human responses to derive their interpretations.”

The Essence of Humanity

“While ChatGPT produces intriguing, human-like responses, it likely doesn’t think in a genuine manner; it appears to draw upon an extensive dataset,” comments Chandril Ghosh, a psychology lecturer at the University of Kent, UK, with a focus on mental health and AI. This dynamic is akin to a musician who has never loved but can pen a poignant song by mimicking elements from existing works. As Santini notes, “If an AI’s interpretation resembles that of a human, it’s not due to shared perception but rather the influence of its training corpus reflecting our collective visual culture.”

As a result, what we receive is a facsimile of reality and of thought, not the real thing. “ChatGPT can describe emotions accurately without experiencing them firsthand,” Ghosh adds. Nonetheless, that doesn’t diminish AI’s relevance for emotional exploration. “AI tools like ChatGPT can comprehend, articulate, and assist individuals in processing their feelings,” he states.

Ghosh emphasizes that ChatGPT essentially regurgitates assorted information from its training dataset, creating an illusion of cognition. This perceived intelligence can be partly attributed to how tech companies market such AI programs. The user-friendly, always helpful demeanor of tools like ChatGPT contributes to their popularity but also complicates perceptions of their true nature.

One way to expose this illusion is simply to resubmit the query, notes Ghosh. Presenting ChatGPT with the same inkblot multiple times can yield varying interpretations within a single session.

When we prompted ChatGPT with the identical inkblot on two separate occasions, it indeed provided different interpretations.

“A human would typically remain consistent in their responses due to the influence of personal experiences and emotions,” explains Ghosh. “Conversely, ChatGPT formulates its answers based on its expansive dataset.”

This poses a problem for anyone trying to read meaning into ChatGPT’s responses to inkblots: the chatbot merely reproduces what its training data contains.

A compelling demonstration of this was conducted by researchers from the MIT Media Lab, who trained an AI model called “Norman” (named after the character Norman Bates from Alfred Hitchcock’s film Psycho) using images sourced from a Reddit group dedicated to gruesome death imagery. When presented with Rorschach inkblots, Norman’s descriptions reflected the morbid nature of its training materials. For example, while a different model trained on a more conventional dataset might see birds perched on a tree branch, Norman described a man being electrocuted.

This serves as a stark reminder of the significance of training data in shaping an AI’s output. Train an algorithm on flawed data, and the resultant AI will inevitably reflect those deficiencies.

Even so, how an AI responds to ambiguous stimuli can be revealing, notes Dekker, particularly in what it chooses to say about an image. “It seems to possess a general understanding of color theory and the emotional reactions they can provoke,” Dekker mentions. This leads him to propose an interesting experiment: “What if we created a fresh set of Rorschach-like images, entirely unknown to the model, and allowed it to analyze those?”

While Dekker isn’t pursuing this himself, AI models are known to “hallucinate”, or fabricate information. Their vision can also be fooled. A notable experiment at MIT showed how researchers could 3D print an object that tricked a machine vision system into misidentifying it. Similarly, minor alterations to a stop sign can render it invisible to AI, posing potential hazards for autonomous vehicles.

AI excels at pattern recognition, but its shifting interpretations of a single ambiguous image highlight a fundamental aspect of the human psyche that AI cannot replicate: the emotions and subconscious meanings we associate with our perceptions. According to Kubiliute, there is no subjectivity in what AI conveys when it interprets inkblots. “It lacks the ability to grasp symbolic meaning or emotional resonance that humans naturally attach to certain visuals,” she asserts.

This discrepancy itself provides profound insights into human cognition. “The human mind grapples with internal conflicts, such as the clash between desires and morals,” observes Ghosh. “In contrast, AI operates on strict logic and lacks the emotional struggle that characterizes human decision-making.”