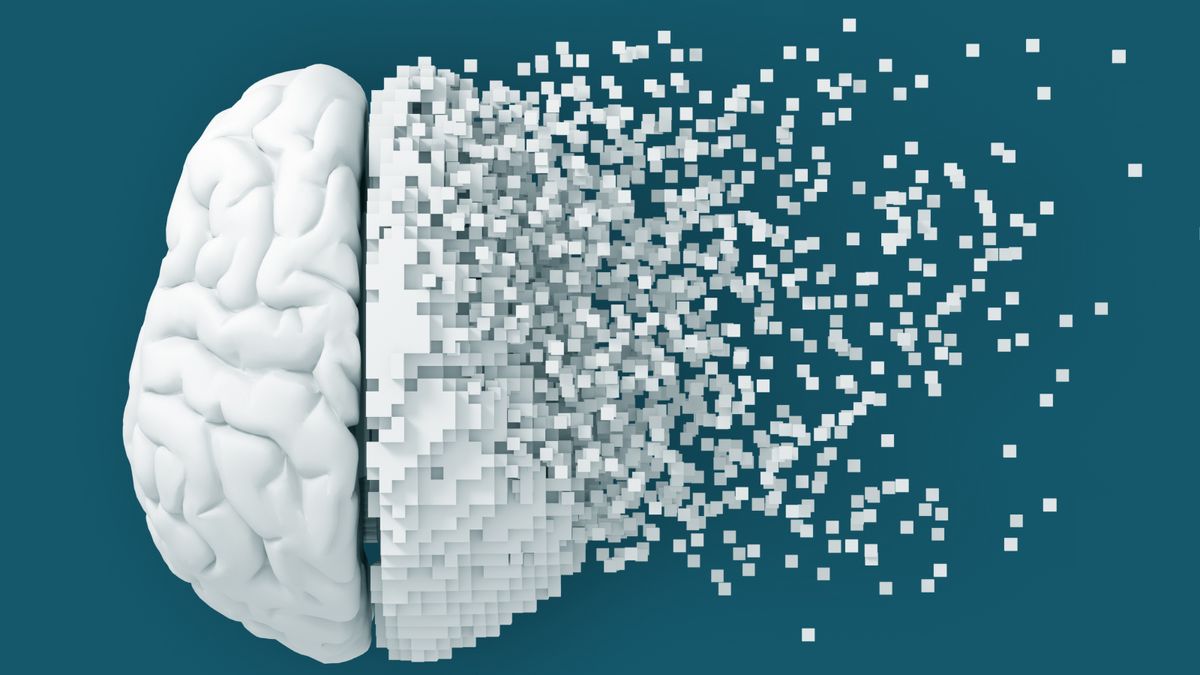

As reliance on artificial intelligence (AI) for medical diagnosis grows, these tools are proving instrumental in spotting anomalies and early warning signs in medical records, X-rays, and other datasets, often before they are apparent to the human eye. However, a study published on December 20, 2024, in the BMJ suggests that leading large language models (LLMs) and chatbots show signs of cognitive impairment resembling the cognitive decline seen in aging humans.

The researchers say these findings challenge the widespread assumption that AI could soon replace medical professionals. In their paper, they write: “The cognitive decline observed in top chatbots may impact their accuracy in medical diagnostics and diminish patients’ trust.”

The researchers tested widely available LLM chatbots, including OpenAI’s ChatGPT, Anthropic’s Claude 3.5 Sonnet, and Alphabet’s Gemini, using the Montreal Cognitive Assessment (MoCA). Neurologists commonly use this test to assess attention, memory, language, visuospatial skills, and executive functions.

MoCA is particularly useful for detecting early signs of cognitive impairment associated with conditions such as Alzheimer’s disease and other dementias. Test-takers are asked, for example, to draw a clock showing a specific time, to subtract seven from 100 repeatedly, and to recall words from a list they heard earlier. In humans, a score of 26 or above out of 30 is generally considered normal, indicating no cognitive impairment.


The LLMs performed well on naming, attention, and language tasks, but all of them struggled with visuospatial skills and executive functions, and some also performed poorly on delayed recall.

The newest model tested, ChatGPT 4o, achieved the highest score (26 out of 30), while the older Gemini 1.0 scored just 16. Older model versions generally scored lower than newer ones, which the authors liken to cognitive decline in older human patients.

The authors stress that their findings are observational and that AI and human cognition differ in fundamental ways, so the results cannot be compared directly. Still, they warn that the models’ weaknesses point to a “notable area of vulnerability” that could hinder the use of AI in clinical settings, particularly for tasks that require visual abstraction or executive function.

This raises the tongue-in-cheek possibility that neurologists may soon have a new kind of patient: AI systems showing signs of cognitive decline.